As AI technologies increasingly permeate rheumatology—from diagnostic aids to predictive models—the need for a grounded, ethical framework has never been more urgent. This article unpacks the key dilemmas clinicians must navigate, offering clarity on where—and how—we should draw the line.

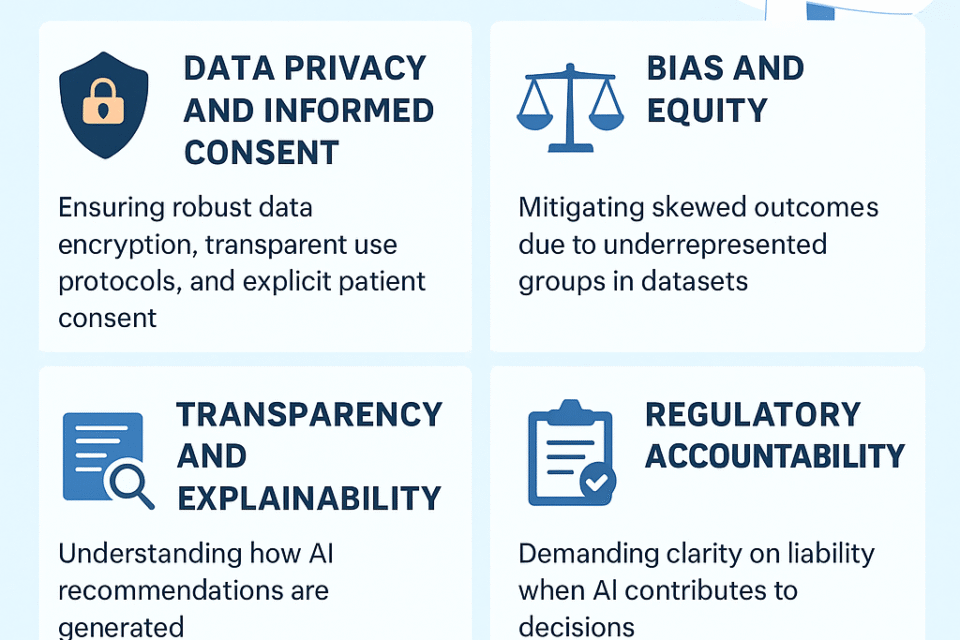

1. Data Privacy and Informed Consent

AI systems depend on large datasets, and those datasets often contain deeply personal information, from genetic markers to detailed disease histories. Ethical deployment means ensuring robust data encryption, transparent use protocols, and explicit patient consent. As highlighted in Clinical Rheumatology, AI’s use in rheumatology raises concerns about patient confidentiality and privacy that must be addressed within ethical and legal boundaries.

Source: https://link.springer.com/article/10.1007/s10067-020-04969-w

2. Bias and Equity

AI models perform best when trained on representative data. Unfortunately, rheumatology datasets often overrepresent certain demographics, risking skewed outcomes for underrepresented groups. For instance, outcome predictions for diseases like lupus may be less accurate for Black or Hispanic patients if training data lacks diversity.

Source: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC11748787/

Findings from other specialties point to the same pitfall: diagnostic tools that underperform for minority patients because of historical bias in the data they were trained on.

Source: https://www.mdpi.com/2077-0383/14/5/1605

3. Transparency and Explainability

Many AI models, especially those built on deep learning, act as “black boxes”—their decision-making opaque, even to developers. In rheumatology, where trust and accountability are essential, clinicians must be able to understand how an AI recommendation was generated.

Source: https://www.emjreviews.com/rheumatology/article/current-applications-and-future-roles-of-ai-in-rheumatology-j170123/
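To make "understanding how a recommendation was generated" concrete, here is a minimal sketch for the simplest case: a logistic-regression style score, where each input's contribution to the log-odds can be read off directly. The feature names, weights, and intercept are hypothetical and chosen only for illustration; they do not come from any validated rheumatology model. Deep learning models do not decompose this cleanly and usually need separate post-hoc explanation methods.

```python
import math

# Hypothetical coefficients for illustration only; not a validated model.
WEIGHTS = {"crp_mg_l": 0.04, "swollen_joints": 0.30, "age_years": 0.01}
INTERCEPT = -3.0


def explain(features):
    """Return the predicted probability and each feature's log-odds contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    # Rank features by the size of their contribution, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


# Made-up patient values, used only to exercise the function.
probability, ranked = explain(
    {"crp_mg_l": 25.0, "swollen_joints": 6, "age_years": 50}
)
for name, value in ranked:
    print(f"{name}: {value:+.2f} log-odds")
print(f"predicted probability: {probability:.2f}")
```

A ranked list like this lets a clinician see which inputs drove a score and question it when the ranking conflicts with what they know about the patient.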

4. Regulatory Accountability

AI in healthcare remains under-regulated globally. Reporting guidelines such as TRIPOD-AI and CONSORT-AI, and legislation such as the EU AI Act (in force since August 2024), offer guidance, but gaps persist, particularly around post-market monitoring and the evolving nature of AI systems. Clinicians should demand clarity on liability when AI contributes to diagnostic or therapeutic decisions.

Sources:

- Reporting guidelines: https://en.wikipedia.org/wiki/Artificial_intelligence_in_healthcare
- EU AI Act ethics table (rheumatology): https://www.ncbi.nlm.nih.gov/pmc/articles/PMC12007595/

5. Human Oversight and Responsibility

AI must enhance—not replace—clinical judgment. The physician’s familiarity with a patient’s broader context, values, and disease trajectory remains indispensable. Overreliance on AI risks eroding this critical human element.

6. Bias Mitigation

Developers and clinicians must collaborate to ensure AI systems are fair and inclusive. This includes curating diverse datasets, implementing bias-aware algorithms, and conducting regular performance audits across population subgroups.

Source: https://www.mdpi.com/2077-0383/14/5/1605
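One way a performance audit across subgroups might look in practice is sketched below. It computes sensitivity (the share of true cases the model catches) for each group and flags groups that trail the best-performing group by more than a chosen margin. The function names, the 0.10 threshold, and the example records are all assumptions made for illustration; a real audit would use validated outcome labels, larger samples, and confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return per-group sensitivity (true-positive rate).

    Each record is a (group, y_true, y_pred) tuple with binary labels.
    Groups with no positive cases are reported as None.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    groups = set()
    for group, y_true, y_pred in records:
        groups.add(group)
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {
        g: (true_pos[g] / positives[g]) if positives[g] else None
        for g in sorted(groups)
    }


def flag_gaps(rates, max_gap=0.10):
    """List groups whose sensitivity trails the best group by more than max_gap."""
    known = {g: r for g, r in rates.items() if r is not None}
    if not known:
        return []
    best = max(known.values())
    return sorted(g for g, r in known.items() if best - r > max_gap)


# Made-up example data: (group, true label, model prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]
rates = sensitivity_by_group(records)
flagged = flag_gaps(rates)
print(rates)
print("Groups needing review:", flagged)
```

Sensitivity is only one possible metric. Specificity or calibration may matter more depending on what a missed or false diagnosis costs the patient.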

7. Global Equity and Access

AI in rheumatology also holds promise in underserved settings, enabling early detection and tele-rheumatology services. But this progress must be equitable—without creating or deepening divides, especially in low-resource regions.

Source: https://onlinelibrary.wiley.com/doi/full/10.1111/1756-185X.70086

In Summary

The promise of AI in rheumatology is real—but so are the ethical challenges. Key steps for responsible implementation include:

- Ensuring patient privacy and clear consent
- Mitigating bias to uphold fairness
- Requiring explainable and transparent models
- Holding systems to regulatory and accountability standards
- Maintaining human oversight and preserving empathy in care
- Bridging access gaps to serve all patient communities

Drawing the ethical line means embracing AI’s innovations while protecting the core human elements of trust, judgment, and equitable care that define rheumatology.